A Bayesian Approach to Digital Matting

Yung-Yu Chuang¹, Brian Curless¹, David Salesin¹˒², Richard Szeliski²

¹University of Washington

²Microsoft Research

Abstract

This paper proposes a new Bayesian framework for solving the matting problem, i.e., extracting a foreground element from a background image by estimating an opacity for each pixel of the foreground element. Our approach models both the foreground and background color distributions with spatially-varying mixtures of Gaussians, and assumes a fractional blending of the foreground and background colors to produce the final output. It then uses a maximum-likelihood criterion to estimate the optimal opacity, foreground and background simultaneously. In addition to providing a principled approach to the matting problem, our algorithm effectively handles objects with intricate boundaries, such as hair strands and fur, and provides an improvement over existing techniques for these difficult cases.
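As a reminder of what "fractional blending" means here, the standard compositing equation is sketched below. The symbol C for the observed color matches its use in the addendum; F, B, and \alpha are assumed to denote the foreground color, background color, and opacity at a pixel. This is the usual textbook formulation rather than text taken from this page.

```latex
\[
  C = \alpha F + (1 - \alpha) B, \qquad 0 \le \alpha \le 1
\]
```

Matting amounts to recovering \alpha, F, and B from the single observation C at each pixel, which is why additional assumptions such as the color distributions described above are needed.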

Citation (bibTex)

Yung-Yu Chuang, Brian Curless, David H. Salesin, and Richard Szeliski. A Bayesian Approach to Digital Matting. In Proceedings of IEEE Computer Vision and Pattern Recognition (CVPR 2001), Vol. II, 264-271, December 2001.

Paper

CVPR 2001 paper (3.6MB PDF)

Addendum

- We forgot to mention one thing in the paper. Because foreground and background samples are also observations from the camera, they should have the same noise characteristics as the observation C. Hence, we added the same camera variance $\sigma_c^2$ to the covariance matrices of the foreground and background samples in Equation (7). We used eigen-analysis to find the orientation of each covariance matrix and added $\sigma_c^2$ along every axis. That is, we decomposed $\Sigma_F$ as $U S V^T$. Letting $S = \mathrm{diag}(s_1^2, s_2^2, s_3^2)$, we set $S' = \mathrm{diag}(s_1^2 + \sigma_c^2,\ s_2^2 + \sigma_c^2,\ s_3^2 + \sigma_c^2)$ and assigned the new $\Sigma_F$ as $U S' V^T$. By doing so, we also avoided most of the degenerate cases, i.e., non-invertible matrices.

- When collecting foreground and background samples, we set a minimum window size and a minimum number of samples. We start with a window of the minimum size. If that window does not give us enough samples, we gradually enlarge it until the minimum number of samples is reached. Note that, as a result, the windows for background and foreground might end up with different sizes.
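The two addendum items can be illustrated with a minimal Python sketch. It is not the authors' implementation. The function names, the nested-list matrix representation, the square window shape, the one-pixel growth step, and the `max_half_width` cap are all assumptions made for illustration. The first function relies on the fact that, for a symmetric covariance with orthonormal eigenvectors U (so that V = U), adding \sigma_c^2 to every eigenvalue is algebraically the same as adding \sigma_c^2 times the identity, so no explicit decomposition is needed in the sketch.

```python
def inflate_covariance(cov, sigma_c):
    """Return cov + sigma_c**2 * I for a square matrix given as nested lists.

    For a symmetric covariance with orthonormal eigenvectors U this equals
    U diag(s_i^2 + sigma_c^2) U^T, i.e. sigma_c^2 added along every axis.
    (Shortcut assumed here; the page describes an explicit eigen-analysis.)
    """
    n = len(cov)
    return [[cov[i][j] + (sigma_c ** 2 if i == j else 0.0) for j in range(n)]
            for i in range(n)]


def collect_samples(is_sample, center, min_half_width, min_samples,
                    max_half_width):
    """Grow a square window around `center` until it holds enough samples.

    is_sample: 2D list of booleans marking usable pixels (for example,
               pixels known to be foreground, or known to be background).
    max_half_width: hypothetical safety cap so the loop always terminates.
    Returns (half_width, [(row, col), ...]).
    """
    rows, cols = len(is_sample), len(is_sample[0])
    r0, c0 = center
    half = min_half_width
    while True:
        # Gather every usable pixel inside the current window,
        # clipping the window to the image bounds.
        found = [(r, c)
                 for r in range(max(0, r0 - half), min(rows, r0 + half + 1))
                 for c in range(max(0, c0 - half), min(cols, c0 + half + 1))
                 if is_sample[r][c]]
        if len(found) >= min_samples or half >= max_half_width:
            return half, found
        half += 1  # assumed growth step of one pixel per iteration
```

Calling `collect_samples` once with a foreground mask and once with a background mask for the same pixel can return different `half_width` values, which corresponds to the remark that the two windows may end up with different sizes. A rank-deficient covariance passed through `inflate_covariance` with a nonzero `sigma_c` becomes invertible, matching the degenerate-case remark.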

Results

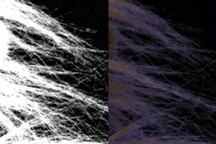

Inputs, Masks and Composites

[Image grid. Columns: Blue-screen matting, Difference matting, Natural image matting. Rows: Input, Segmentation, Composite (Bayesian).]

Lighthouse image and background images used in composite courtesy

Philip Greenspun, http://philip.greenspun.com.

Woman image was obtained from Corel Knockout's tutorial, Copyright

© 2001 Yung-Yu Chuang, Brian Curless, David Salesin, Richard Szeliski and its licensors Corel. All rights reserved.

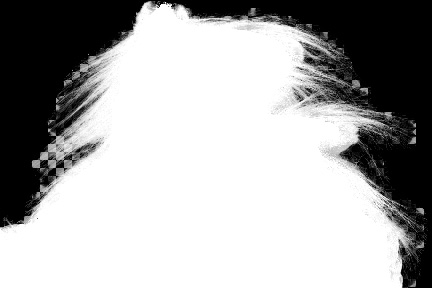

Blue-screen Matting

[Image grid. Columns: Alpha Matte, Composite (black), Inset, Composite. Rows: Mishima, Bayesian, Ground truth.]

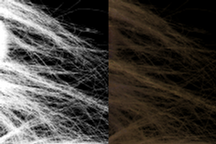

"Synthetic" Natural Image Matting

[Image grid. Columns: Alpha Matte, Composite, Inset. Rows: Difference Matting, Knockout, Ruzon & Tomasi, Bayesian, Ground Truth.]

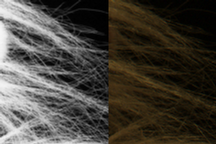

Natural Image Matting

[Image grid. Columns: Alpha Matte, Composite, Inset (repeated for two examples). Rows: Knockout, Ruzon & Tomasi, Bayesian.]

Additional results

The first two images courtesy Philip Greenspun, http://philip.greenspun.com.

Woman image was obtained from Corel Knockout's tutorial.

cyy -a-t- cs.washington.edu